Models and sensor data: a powerful combination

Models and sensor data are an indispensable combination. Sensor data is needed to validate models, and models are often necessary to understand and extrapolate the data. In the past few months, VORtech has been working on two very exciting projects that combine sensor data and models in innovative applications.

Finding leaks

The first application in which we combine sensor data with a model is finding leaks in water distribution networks. VORtech built a demonstrator for the water company Vitens that can locate simulated leaks in the distribution network of Leeuwarden using only a few pressure and flow sensors.

The idea is simple enough: build a computer model of the distribution network and assume a leak of unknown size in each pipe. Then calibrate the model by adjusting the leak sizes until its output matches the observations. In principle, this should yield a leak size of zero in every pipe except the one that is actually leaking.
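
The calibration idea can be illustrated with a toy sketch. It assumes, purely for illustration, that the effect of leaks on the sensors is linear and described by a hypothetical sensitivity matrix `S` (one row per sensor, one column per pipe); in the real application this relation comes from the nonlinear Epanet hydraulic model. The leak sizes are fitted by least squares using projected gradient descent, which keeps every leak size non-negative. To keep the toy well posed, it has more sensors than pipes, which is not the case in a real network.

```python
def calibrate_leaks(S, obs, step=0.3, iters=2000):
    """Fit non-negative leak sizes so that the modelled sensor response S @ leaks
    matches the observations in the least-squares sense (projected gradient descent)."""
    n_sensors, n_pipes = len(S), len(S[0])
    leaks = [0.0] * n_pipes
    for _ in range(iters):
        # Residual: modelled minus observed response at each sensor
        resid = [sum(S[i][j] * leaks[j] for j in range(n_pipes)) - obs[i]
                 for i in range(n_sensors)]
        # Gradient of 0.5 * sum(resid**2) with respect to each leak size
        grad = [sum(S[i][j] * resid[i] for i in range(n_sensors))
                for j in range(n_pipes)]
        # Gradient step, then project back onto leak sizes >= 0
        leaks = [max(0.0, leaks[j] - step * grad[j]) for j in range(n_pipes)]
    return leaks


# Hypothetical sensitivities: 4 sensors (rows) x 3 pipes (columns)
S = [[1.0, 0.2, 0.1],
     [0.3, 1.0, 0.2],
     [0.1, 0.3, 1.0],
     [0.5, 0.5, 0.5]]
true_leaks = [0.0, 2.0, 0.0]  # simulated leak in the second pipe only
obs = [sum(row[j] * true_leaks[j] for j in range(3)) for row in S]

estimate = calibrate_leaks(S, obs)
print([round(x, 3) for x in estimate])  # → [0.0, 2.0, 0.0]
```

The calibration returns zero for the two intact pipes and recovers the leak in the second pipe, which is exactly the behaviour the idea relies on.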

Experimenting with algorithms

Ruurd Dorsman from VORtech built the demo application. Ruurd: “Vitens uses the Epanet software for certain analyses of its network. Epanet is an open-source package for simulating distribution networks. For our experiments, we coupled Epanet to OpenDA, an open-source framework for combining models and sensor data. We develop it together with several other parties, and it has proven itself in a large number of demanding applications. Once we had coupled Epanet to OpenDA, we could try out all the algorithms in OpenDA.”

To see whether the idea was feasible at all, we started with a brute-force approach: simply try all combinations of potential leaks and select the one that best matches the observations. This provided a lot of insight. Ruurd: “We saw that there were multiple combinations that all gave results that more or less matched the sensor data. But the right one gave by far the best match. So that was encouraging.”
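
The brute-force idea can be sketched on the same kind of toy problem. Again, a hypothetical linear sensitivity matrix `S` stands in for the Epanet model, and each pipe's leak size is restricted to an assumed small grid of values (`levels`). Every combination is simulated, scored by its squared misfit to the observations, and the scenarios are ranked from best to worst.

```python
from itertools import product


def rank_leak_scenarios(S, obs, levels):
    """Try every combination of leak sizes (one value from `levels` per pipe)
    and return (misfit, combination) pairs sorted from best to worst match."""
    n_pipes = len(S[0])
    ranked = []
    for combo in product(levels, repeat=n_pipes):
        # Simulated sensor response for this leak scenario
        pred = [sum(row[j] * combo[j] for j in range(n_pipes)) for row in S]
        # Sum of squared differences with the observations
        misfit = sum((p - o) ** 2 for p, o in zip(pred, obs))
        ranked.append((misfit, combo))
    ranked.sort()
    return ranked


# Hypothetical sensitivities: 4 sensors (rows) x 3 pipes (columns)
S = [[1.0, 0.2, 0.1],
     [0.3, 1.0, 0.2],
     [0.1, 0.3, 1.0],
     [0.5, 0.5, 0.5]]
obs = [0.4, 2.0, 0.6, 1.0]  # response to a leak of size 2 in the second pipe

ranked = rank_leak_scenarios(S, obs, levels=[0.0, 1.0, 2.0, 3.0])
for misfit, combo in ranked[:3]:
    print(round(misfit, 3), combo)
```

Printing the top few scenarios shows how the best match compares with the runners-up, which is the kind of insight the brute-force experiments gave.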

In practice, it is impossible to evaluate all possible combinations of leaks, because a network usually contains a very large number of pipes. We therefore tried another OpenDA algorithm. It had originally been developed for a different kind of application, so it took some additional development to get it working. In the end it worked, but it found the wrong solution too often. Ruurd has therefore developed a different algorithm that seems to work much better.
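
A quick count shows why exhaustive search breaks down. The sketch below only counts which pipes leak (leak sizes would multiply the count further) for a hypothetical network of 1,000 pipes; that size is an assumption for illustration, not the size of the Leeuwarden network.

```python
from math import comb


def n_leak_scenarios(n_pipes, max_leaks):
    """Number of ways to choose at most `max_leaks` leaking pipes out of `n_pipes`,
    including the scenario without any leak."""
    return sum(comb(n_pipes, k) for k in range(max_leaks + 1))


n_pipes = 1000  # hypothetical network size
for max_leaks in (1, 2, 3):
    print(max_leaks, n_leak_scenarios(n_pipes, max_leaks))
# 1 1001
# 2 500501
# 3 166667501

# Allowing any number of simultaneous leaks gives 2**n_pipes scenarios
print(len(str(n_leak_scenarios(n_pipes, n_pipes))), "digits")  # → 302 digits
```

Each scenario would also need its own hydraulic simulation, so beyond one or two simultaneous leaks a smarter search algorithm is unavoidable.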

Test case

The next step will be to try this algorithm on a real test case. A major leak in Leeuwarden in 2013 seems an excellent candidate. As the application will eventually run at Vitens, we switched to Python, which is better supported by Vitens’ IT department. That means we can no longer use OpenDA, because the Python interface for OpenDA is still under construction. That is no problem for Ruurd: “Python is my preferred programming language, so it is even better for me. Still, the first steps with OpenDA were essential. It was great to be able to try out various algorithms. Also, when we started there was no Python interface for Epanet. Coupling Epanet to OpenDA was in fact easier than using it from Python.”

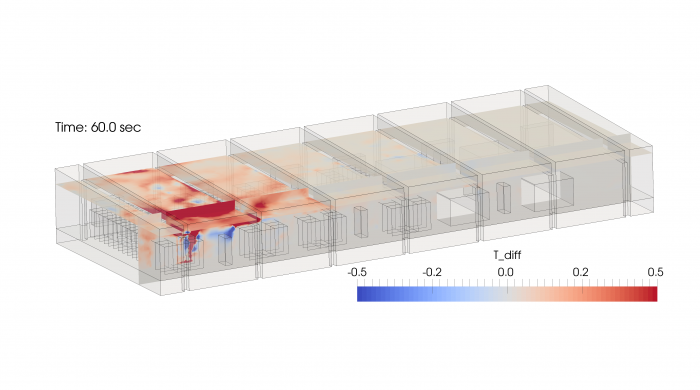

A complete temperature picture for data centers

The second project that combines sensor data and a model is the 4DCOOL project. This project develops an application that shows the actual air flow and temperature distribution in a data center in real time. Such information helps data centers optimize their cooling. As cooling is currently a major part of the operational costs, the business case for the application is easy to make.

Perf-IT, a company from Sliedrecht in the Netherlands, is one of the partners in the project. Perf-IT supplies systems for the management of data centers (DCIM, Data Center Infrastructure Management). René de Theije from Perf-IT sees the advantages of 4DCOOL. René: “Currently, flow models are hardly used in data centers. They are expensive to build, and usually the results do not match the real situation. As a result, operators set the cooling based on gut feeling, and most of the time that is far from optimal, all the more so because operators prefer to stay on the safe side anyway. If we can offer them a reliable real-time flow model, they get a powerful tool to find the optimal setting without having to do risky experiments.”

CFD and sensor data

Central to 4DCOOL is a computer model that computes the air flow, pressure and temperature in a data center room. This model is based on OpenFOAM, an open-source package for computational fluid dynamics (CFD). Project partner Actiflow used it to create a relatively coarse model that is fast enough for real-time computations but still sufficiently accurate. Even so, this model will always deviate from the real situation: its input data will not be exactly right, it cannot represent the flow perfectly, and things change in the real world.

That is what makes 4DCOOL so special. It constantly steers the computer model towards the latest observations while respecting the physics. This way, the model will eventually match the actual situation in the room.
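
The idea of steering a model towards observations can be illustrated with the simplest data assimilation method, a scalar Kalman filter. This is a swapped-in textbook technique, not the TNO algorithm used in 4DCOOL or a specific OpenDA method. The one-box room model, its coefficients and the variances `Q` (model error) and `R` (sensor error) are all hypothetical. The model deliberately underestimates the heat load, so on its own it drifts away from the "real" room, while the steered run is nudged back towards every sensor reading.

```python
def kalman_update(x_f, P_f, y, R):
    """Blend a model forecast (x_f, variance P_f) with an observation (y, variance R)."""
    K = P_f / (P_f + R)        # Kalman gain: how much weight the sensor gets
    x_a = x_f + K * (y - x_f)  # analysis: forecast nudged towards the observation
    P_a = (1.0 - K) * P_f      # uncertainty shrinks after using the observation
    return x_a, P_a


A = 0.9          # hypothetical model: T_next = A*T + (1 - A)*T_SUPPLY + heat
T_SUPPLY = 18.0  # hypothetical supply air temperature in degrees C


def step(T, heat):
    """One time step of a hypothetical one-box room temperature model."""
    return A * T + (1.0 - A) * T_SUPPLY + heat


def simulate(n_steps=50, Q=0.1, R=0.25):
    T_true = T_free = T_da = T_SUPPLY
    P = 1.0  # initial variance of the steered temperature
    for _ in range(n_steps):
        T_true = step(T_true, heat=1.0)  # the "real" room, read by the sensor
        T_free = step(T_free, heat=0.5)  # model with a wrong heat load, no data
        T_da = step(T_da, heat=0.5)      # same wrong model, steered by the sensor
        P = A * A * P + Q                # forecast variance: propagate, add model error
        # Sensor assumed noise-free in this demo; R still sets how much it is trusted
        T_da, P = kalman_update(T_da, P, T_true, R)
    return T_true, T_free, T_da


T_true, T_free, T_da = simulate()
print(f"real {T_true:.2f}  free model {T_free:.2f}  steered model {T_da:.2f}")
```

The steered run stays much closer to the "real" temperature than the free run. With a full CFD model the state contains a very large number of values, and each sensor reading must also correct the unobserved parts of the flow field consistently, which is far more demanding than this scalar toy.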

The technology to steer a model towards sensor data is a specialty of VORtech. We base it on OpenDA, an open-source framework that we are developing together with several other parties. OpenDA contains various techniques for combining sensor data and models, and it has proven itself in a large number of demanding applications.

Using OpenDA, the computational core of 4DCOOL could be developed quickly, which showed that the idea as such works. Unfortunately, the techniques in OpenDA require too much computational effort for the large CFD models that are needed, so this approach does not work in real time.

A new method

Fortunately, TNO had developed a method that would be fast enough. TNO actually started the 4DCOOL project because they thought that data centers would offer a good business case for their algorithm. Project lead Martijn Clarijs: “We also considered other applications, for example in horticulture. But the data center market is more interesting, as the benefits there are larger and there is more room for investment. Also, data centers are easier than greenhouses, because plants complicate the computations. Fortunately, there was not yet a system like 4DCOOL for data centers.” René de Theije of Perf-IT adds: “There are systems that use the correlation between sensor readings and the cooling settings. That seems to work, but it doesn’t help you when something big changes in your data center. And it doesn’t give you the insight to make fundamental changes.”

Martijn Clarijs from TNO says that they still had to do major development on their algorithm to make it work in practice. Martijn: “Initially we got results that were completely wrong. It took a really big effort to find the problems. But now we have it working. It is not as flexible as the algorithms from VORtech, but it is much faster.”

Martijn thinks that it was essential to try both approaches. “For one thing, we knew that VORtech would get something working, so we were certain that there would at least be a result at the end of the project. And it helped to compare results and discuss them.”

Proof of principle

The project has now been completed on a positive note. The principle has been demonstrated beyond any doubt, which makes it easier to find investment for a follow-up project. That project should make the 4DCOOL system ready for the market.

Once again, the combination of sensor data and models has proven to open up interesting opportunities.